Docker

Introduction

Open Container Initiative

- Runtime Specification: how a container is created from an unpacked image and run

- Image Specification: what an image contains (layers and configuration) and the format it must be written in

- Distribution Specification: how images are distributed and shared, e.g. through container registries such as Docker Hub

Containers

- Shared Kernel

- No dependency conflicts

- Isolated

- Faster startup and shutdown

- Faster provisioning and decommissioning

- Lightweight enough to use in development!

Linux Kernel Internals

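- Namespaces (isolate what a process can see: processes, network, mounts, users, etc.)

- cgroups (control CPU and memory allocation and priorities)

- OverlayFS filesystem (stacks image layers into a single container filesystem)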

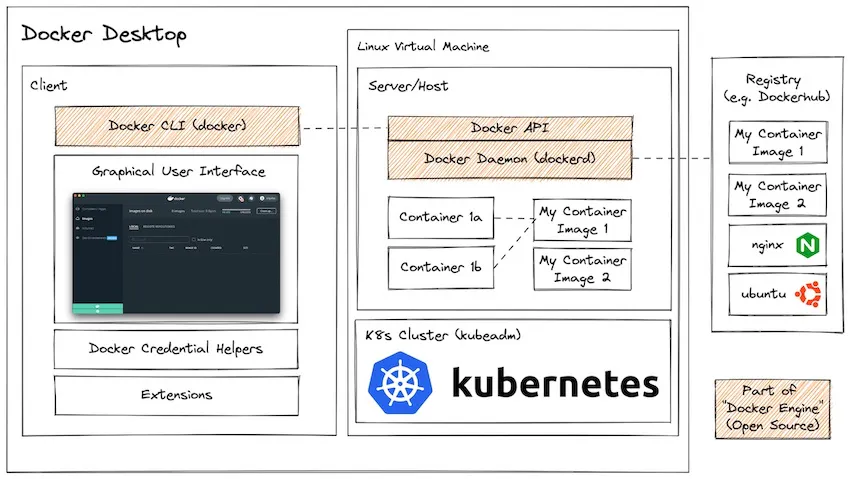

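Docker Desktop creates a Linux VM to host Docker containers on Windows and macOS, because containers need a Linux kernel. On a Linux machine, Docker Engine runs containers directly on the host kernel, so no extra VM is needed (Docker Desktop for Linux, however, still runs its own VM).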

On a Linux host, Docker stores container-related files (images, containers, volumes) in the /var/lib/docker folder.

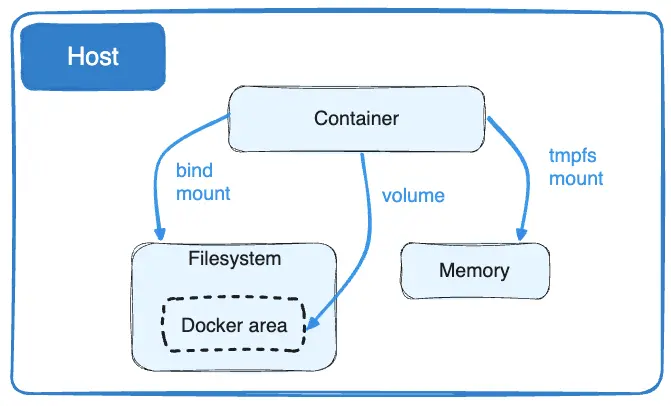

Data within Containers

- By default, all data created or modified in a container is ephemeral: it lives in the container's writable layer and is lost when the container is removed

- If some data must be present every time a container is run from the image (e.g. dependencies), build it into the image itself

- If the application generates data that must persist, store it in a volume, outside the ephemeral (short-lived) container filesystem

- Use bind mounts to provide configuration files from the host; they are not recommended for application data

- Bind mounts can also mount source code into the container to support hot reload during development

Building Container Images

👨‍🍳 Application Recipe:

- Start with an Operating System

- Install the language runtime

- Install any application dependencies

- Set up the execution environment

- Run the application

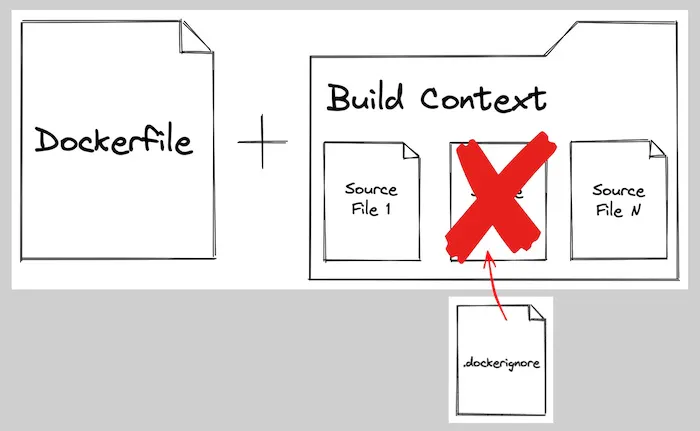

Docker Build Context

Common Build Commands

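FROM: Specifies the base image (e.g. an operating system or language runtime image) that the new image builds on.

RUN: Executes a command during the build phase; its result is saved as a new image layer.

COPY: Copies files from the build context (the directory passed to docker build, e.g. `.`) into the image.

CMD: Provides the default command to run when the container starts; it can be overridden on the docker run command line.

A line starting with a hash (#) is a comment. Instructions are not case-sensitive, but by convention they are written in uppercase, followed by their arguments.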
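A toy parser makes the syntax rules above concrete: one instruction plus arguments per line, `#` comment lines, and case-insensitive instruction names. This is a minimal Python sketch under simplifying assumptions. It is not how Docker's own parser works. It also joins lines that end in a backslash, as in the RUN --mount example in these notes. It ignores parser directives, heredocs, and escape-character changes. `parse_dockerfile` is a hypothetical name.

```python
def parse_dockerfile(text: str) -> list[tuple[str, str]]:
    """Return (INSTRUCTION, arguments) pairs from Dockerfile text (toy parser)."""
    instructions: list[tuple[str, str]] = []
    pending = ""  # text of an instruction continued with a trailing backslash
    for raw in text.splitlines():
        line = raw.strip()
        # Blank lines and comment lines are skipped, even inside a continuation.
        if not line or line.startswith("#"):
            continue
        if line.endswith("\\"):
            pending += line[:-1].rstrip() + " "
            continue
        full = pending + line
        pending = ""
        keyword, *rest = full.split(maxsplit=1)
        # Instruction names are case-insensitive; normalize to uppercase.
        instructions.append((keyword.upper(), rest[0] if rest else ""))
    return instructions


example = r"""
# Comment lines are ignored
from node:19.6-bullseye-slim AS base
WORKDIR /usr/src/app
RUN --mount=type=cache,target=/usr/src/app/.npm \
    npm set cache /usr/src/app/.npm && \
    npm install
CMD [ "node", "index.js" ]
"""

for instruction, args in parse_dockerfile(example):
    print(instruction, "->", args)
```

The lowercase `from` comes out as `FROM`, and the three-line RUN becomes a single instruction.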

Dockerfile

A text file that specifies how an image is built and configured

A Naive Implementation example

```dockerfile
FROM node

COPY . .

RUN npm install

CMD [ "node", "index.js" ]
```

Improvements

- Pin the base image to a specific (ideally slim) tag to improve security, reproducibility, and build time

- Set a working directory for clarity

- Copy the dependency files (package.json, package-lock.json) and install dependencies before copying the source code. Docker caches each layer, so later builds reuse the dependency-installation layer as long as those files are unchanged. If the source code is copied together with them, any source change invalidates that layer and the dependencies are reinstalled on every build, even though a source change does not usually mean the dependencies changed

- Use a non-root user for security

- Configure for the production environment (no debugger, no dev dependencies)

- Add useful metadata (labels)

- Use a cache mount to speed up dependency installation (BuildKit feature)

- Use a multi-stage build to produce separate dev and production images from a shared base
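A simplified model of the build cache shows why the copy order matters. Each step's cache key covers its parent's key, the instruction text, and the contents of any files it copies. One change therefore invalidates that step and every step after it. The Python sketch below assumes this model; Docker's real cache has more rules than this. The function names and the sample file contents are hypothetical.

```python
import hashlib


def step_cache_keys(steps, files):
    """Cache key per build step in a simplified model of Docker's layer cache.

    steps: list of (instruction, copied_paths); copied_paths are the
           build-context files a COPY reads (empty for other instructions).
    files: dict mapping build-context path -> file contents.
    """
    keys = []
    parent = ""
    for instruction, copied in steps:
        h = hashlib.sha256()
        h.update(parent.encode())
        h.update(b"\0" + instruction.encode())
        # Only COPY-like steps depend on file contents; RUN depends on its text.
        for path in sorted(copied):
            h.update(b"\0" + path.encode() + b"\0" + files[path].encode())
        parent = h.hexdigest()  # chained: a change here changes every later key
        keys.append(parent)
    return keys


def rebuilt_steps(steps, old_files, new_files):
    """Instructions whose cache key changed, i.e. that would run again."""
    old = step_cache_keys(steps, old_files)
    new = step_cache_keys(steps, new_files)
    return [step[0] for step, a, b in zip(steps, old, new) if a != b]


before = {"package.json": '{"dependencies": {}}', "index.js": "console.log(1)"}
after = {**before, "index.js": "console.log(2)"}  # source change only

naive = [
    ("COPY . .", ["package.json", "index.js"]),
    ("RUN npm install", []),
    ('CMD ["node", "index.js"]', []),
]
better = [
    ("COPY package*.json ./", ["package.json"]),
    ("RUN npm install", []),
    ("COPY . .", ["package.json", "index.js"]),
    ('CMD ["node", "index.js"]', []),
]

print(rebuilt_steps(naive, before, after))   # npm install runs again
print(rebuilt_steps(better, before, after))  # npm install stays cached
```

With the naive order, a source-only change re-runs `npm install`. Copying `package*.json` first keeps that step cached.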

A good implementation example

```dockerfile
#-------------------------------------------
# Name the first stage "base" to reference later
FROM node:19.6-bullseye-slim AS base
#-------------------------------------------
LABEL org.opencontainers.image.authors="sid@devopsdirective.com"
WORKDIR /usr/src/app
COPY package*.json ./

#-------------------------------------------
# Use the base stage to create dev image
FROM base AS dev
#-------------------------------------------
RUN --mount=type=cache,target=/usr/src/app/.npm \
    npm set cache /usr/src/app/.npm && \
    npm install
COPY . .
CMD ["npm", "run", "dev"]

#-------------------------------------------
# Use the base stage to create separate production image
FROM base AS production
#-------------------------------------------
ENV NODE_ENV=production
# --omit=dev replaces the deprecated --only=production flag
RUN --mount=type=cache,target=/usr/src/app/.npm \
    npm set cache /usr/src/app/.npm && \
    npm ci --omit=dev
USER node
COPY --chown=node:node ./src/ .
EXPOSE 3000
CMD [ "node", "index.js" ]
```

Running Docker Container

There are two common ways to run a container:

- Create a docker-compose.yml file and run `docker compose up`

- Use the `docker run` command directly

Docker Compose can run multiple containers at the same time, and it manages their lifecycle (create, start, stop, remove) more conveniently than separate `docker run` commands.

Command Examples

```shell
# Run Postgres, setting a password and publishing port 5432 on the host
docker run --env POSTGRES_PASSWORD=foobar --publish 5432:5432 postgres:15.1-alpine
docker run docker/whalesay cowsay "Hello, World"
# Start an interactive shell in a throwaway Ubuntu container
docker run --interactive --tty --rm ubuntu:22.04
# Start a stopped container, then attach to its main process (e.g. its shell)
docker start <container-name>
docker attach <container-name>
```

```shell
# List all networks
docker network ls
# Create a user-defined network named my-network
docker network create my-network
# Run a container attached to the custom network
docker run --network my-network ubuntu sleep 99
```

Most Used Options

- `--detach`, `-d`: run the container in the background

- `--entrypoint`: override the ENTRYPOINT defined in the Dockerfile

- `--env`, `-e`, `--env-file`: set environment variables at runtime (`--env-file` reads them from a file)

- `--init`: run docker-init as PID 1, which forwards signals and reaps zombie processes. Without it, the container's entrypoint/command runs as PID 1

- `--interactive --tty` or `-it`: keep STDIN open and allocate a terminal, giving an interactive shell in the container

- `--rm`: remove the container automatically when its main process exits

- `--name`: give the container a name; two containers on the same host cannot share a name

- `--network <name>`, `--net`: connect the container to a network

- `--publish 8080:80`, `-p`: map a host port to a container port; here 8080 is the host port and 80 is the container port

- `--platform linux/arm64/v8`: run an image built for a different CPU architecture (emulated, e.g. via QEMU, when it differs from the host)

- `--restart unless-stopped`: restart the container when it exits (e.g. crashes), unless it was explicitly stopped

- `--cpu-shares`: relative CPU weight (default 1024) that only applies when containers compete for CPU; use `--cpus` for a hard limit

- `--memory`, `-m`: maximum amount of memory the container may use

- `--pids-limit`: maximum number of processes the container can create (`--pid` is a different option that sets the PID namespace, e.g. `--pid=host`)

- `--privileged`: give the container all capabilities and access to host devices, bypassing most security isolation

- `--read-only`: mount the container's root filesystem as read-only

- `--link=db`: let the container reach the db container by the name `db`; this is a legacy feature, and user-defined networks are preferred
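This is why `--init` (or `init: true` in Compose) matters. `docker stop` sends SIGTERM and, after a grace period (10 seconds by default), SIGKILL. The kernel does not apply default signal actions to a container's PID 1. An app running as PID 1 without its own SIGTERM handler therefore ignores the signal and is only killed when the timeout expires.

Below is a minimal Python sketch of an app that installs its own handler; the same idea applies to Node's `process.on('SIGTERM', ...)`. The function names are hypothetical. `--init` remains useful for reaping zombie processes.

```python
import signal
import threading
import time

# Set by the signal handler; the main loop polls it to exit cleanly.
shutdown_requested = threading.Event()


def handle_sigterm(signum, frame):
    """Only record the request; do the actual cleanup in the main loop."""
    shutdown_requested.set()


def install_handlers() -> None:
    # docker stop sends SIGTERM; SIGINT covers Ctrl+C with docker run -it.
    signal.signal(signal.SIGTERM, handle_sigterm)
    signal.signal(signal.SIGINT, handle_sigterm)


def serve_forever(poll_seconds: float = 0.5) -> None:
    """Container entry point: work until a shutdown signal arrives."""
    install_handlers()
    while not shutdown_requested.is_set():
        time.sleep(poll_seconds)  # placeholder for real work
    # Close connections, flush buffers, etc. here before exiting.
    print("shutting down cleanly")
```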

Docker Compose

```yaml
# Template: empty keys are placeholders to fill in
version: "3.9" # obsolete in the Compose Specification; recent docker compose ignores it

services:
  service1:
    image:
    build:
      context:
      dockerfile:
    init: true
    volumes:
      - host_path/volume:container_path # Bind mount (short syntax)
      - type: bind # Bind mount (long syntax)
        source: path
        target: path
    networks:
      - network1
    ports:
      - 5173:5173

  service2:
    image:
    build:
      context:
      dockerfile:
      target: stagename # Build only this stage of a multi-stage Dockerfile
    init: true # Run an init process as PID 1 (signal forwarding, zombie reaping)
    depends_on:
      - service1 # Start service1 before this service
    environment:
      - Key=Value
    networks:
      - network1
      - network2
    ports:
      - 3000:3000
    restart: unless-stopped

  service3:
    image:
    volumes:
      - volume1:container_path # Named volume
    environment:
      - Key=Value
    networks:
      - network2
    ports:
      - 5432:5432

# Declare the named volumes and networks used above
volumes:
  volume1:

networks:
  network1:
  network2:
```

By default, Compose creates these networks with the bridge driver.

In the above example:

- service2 can see both service1 (through network1) and service3 (through network2)

- service1 and service3 are not visible to each other, because they share no network
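The visibility rule in the example boils down to this: two services can reach each other by service name only if they share at least one network. Below is a small Python sketch of that check, using the networks from the example. It is a simplified model. It ignores the implicit `default` network that Compose attaches to services without a `networks:` key, and it ignores ports published to the host. `reachable_pairs` is a hypothetical name.

```python
from itertools import combinations


def reachable_pairs(services):
    """Return pairs of services that share at least one network.

    services: mapping of service name -> list of network names, mirroring
    the `networks:` key of each service in the compose file.
    """
    pairs = set()
    for a, b in combinations(sorted(services), 2):
        if set(services[a]) & set(services[b]):
            pairs.add((a, b))
    return pairs


compose_networks = {
    "service1": ["network1"],
    "service2": ["network1", "network2"],
    "service3": ["network2"],
}

print(sorted(reachable_pairs(compose_networks)))
```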

Common commands:

- `docker compose up`: start all containers and attach to their output

- `docker compose up -d`: start all containers in detached (background) mode

- `docker compose down`: stop and remove the containers (and the networks Compose created for them)

NOTE: docker compose accepts multiple Compose files, e.g. `docker compose -f docker-compose.yml -f <override>.yml up`. Later files are merged on top of earlier ones and override matching settings, so the additional file only needs the configuration that changes.

Container Security

Image Security

- Use a minimal base image (e.g. from chainguard.dev)

- Scan images for known vulnerabilities, e.g. `docker scout cves <image-tag>`

- Run as a user with minimal permissions

- Keep sensitive info out of images

- Sign and verify images

- Use fixed image tags (pin at least major.minor versions, or a digest)
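Pinning can be checked automatically, for example in CI before a build. Below is a minimal Python sketch that relies on two assumptions. First, it uses a simplified reading of image references: a name, an optional `:tag`, and an optional `@digest`. Second, it applies a heuristic in which a digest or a numeric major.minor tag counts as pinned. `parse_image_ref` and `is_pinned` are hypothetical helpers, not part of any Docker tool.

```python
def parse_image_ref(ref: str) -> tuple[str, str, str]:
    """Split an image reference into (name, tag, digest).

    A digest follows '@'. A tag is the text after the last ':' only if that
    ':' comes after the last '/', so a registry port such as localhost:5000
    is not mistaken for a tag.
    """
    name, _, digest = ref.partition("@")
    tag = ""
    colon = name.rfind(":")
    if colon > name.rfind("/"):
        name, tag = name[:colon], name[colon + 1:]
    return name, tag, digest


def is_pinned(ref: str, min_parts: int = 2) -> bool:
    """True for a digest, or a tag starting with >= min_parts numeric
    components (e.g. 15.1 in 15.1-alpine). Missing tags and 'latest' fail."""
    _, tag, digest = parse_image_ref(ref)
    if digest:
        return True
    if not tag or tag == "latest":
        return False
    parts = tag.split("-")[0].split(".")  # "15.1-alpine" -> ["15", "1"]
    return len(parts) >= min_parts and all(p.isdigit() for p in parts)


for image in ["postgres:15.1-alpine", "node", "node:latest", "localhost:5000/app"]:
    print(image, "pinned" if is_pinned(image) else "NOT pinned")
```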

Runtime Security

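- Start the Docker daemon with `--userns-remap`, so that root inside a container maps to an unprivileged user on the host

- Use `--read-only` if no write access is required

- Use `--cap-drop=all`, then `--cap-add` only the capabilities you need

- Limit CPUs and memory

- Use `--security-opt` (e.g. `no-new-privileges`, or custom seccomp/AppArmor profiles)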

Deploying to Production

COOL CONCEPT: You can point the Docker CLI at a Docker Engine running on another host, so that every docker command applies to that host. This works over SSH.

Just run:

export DOCKER_HOST=ssh://user@ip

Remember: set up SSH key-based access to the server for this to work.

Things to keep in mind

- Security

- Ergonomics / Developer Experience

- Scalability

- Downtime

- Observability

- Persistent storage configuration

- Cost

Why not use Docker Compose for Deployment

- No way to deploy without downtime

- No proper way to handle credentials (no encrypted secrets store)

- Can only be used on a single host

Docker Swarm

```shell
# Enable swarm mode on this host
docker swarm init
# Deploy containers as a stack; docker-swarm.yml is nearly identical to docker-compose.yml
docker stack deploy -c docker-swarm.yml stack_name
# Remove the stack
docker stack rm stack_name
# List all services with information about their replicas
docker service ls
# Create a secret on a swarm manager (value read from a file, or "-" for stdin)
docker secret create <secret-name> <file>
```

In the stack file, declare the secrets at the top level:

```yaml
secrets:
  database-url:
    external: true
  postgres-passwd:
    external: true # Already created with docker secret create, so the value is not in this file
```

The created secrets are available to the application as files (mounted under /run/secrets/), so the application must read the file to get the secret value.
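Below is a minimal sketch of the application side, written in Python for illustration; in Node the same idea is a `fs.readFileSync` call. It assumes the setup used in these notes' stack file, where the environment variable holds the secret's file path (e.g. DATABASE_URL=/run/secrets/database-url) rather than the value itself. `read_secret` is a hypothetical helper.

```python
import os
from pathlib import Path


def read_secret(env_var: str) -> str:
    """Return the secret stored in the file whose path is in `env_var`."""
    path = os.environ.get(env_var)
    if not path:
        raise RuntimeError(f"{env_var} is not set")
    # Drop only a trailing newline (common when the secret was created with echo).
    return Path(path).read_text().rstrip("\r\n")
```

Reading the value at startup, instead of baking it into an environment variable, keeps it out of `docker inspect` output and out of the image.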

```yaml
service:
  environment:
    - DATABASE_URL=/run/secrets/database-url
  secrets:
    - database-url # Give this service access to the secret
```

We can also add deployment configuration to specify the number of replicas and how updates should be handled:

```yaml
service:
  deploy:
    mode: replicated
    replicas: 2
    update_config:
      order: start-first # Start the new container before stopping the old one
```

Adding a healthcheck tells the Docker engine whether the running container is healthy.

During an update, the new container does not receive traffic, and the old container is not removed, until the new container is reported healthy.
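The healthcheck `test` command reports health through its exit status: 0 means healthy and 1 means unhealthy. Below is a minimal probe sketch in Python. It assumes the app serves a hypothetical `/health` endpoint on port 3000 and that a Python interpreter exists in the image. The slim Node images used earlier do not include one, so there the same probe would be written in Node.

```python
import sys
import urllib.request

DEFAULT_URL = "http://localhost:3000/health"  # hypothetical endpoint


def is_healthy(url: str, timeout: float = 3.0) -> bool:
    """True if a GET on `url` returns HTTP 200 within `timeout` seconds."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError, HTTPError (4xx/5xx), refused connections and timeouts
        # are all OSError subclasses: report unhealthy.
        return False


def main(argv=None) -> int:
    """Exit status for the healthcheck: 0 = healthy, 1 = unhealthy."""
    argv = sys.argv if argv is None else argv
    url = argv[1] if len(argv) > 1 else DEFAULT_URL
    return 0 if is_healthy(url) else 1


# As a script (e.g. a hypothetical healthcheck.py), end with: raise SystemExit(main())
```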

```yaml
service:
  healthcheck:
    test: ["CMD", "Put a test command here"]
    interval: 30s
    timeout: 5s
    retries: 3 # Consecutive failures before the container is marked unhealthy
    start_period: 10s # Startup grace period: failures during it don't count toward retries
```

Docker Network

- bridge: the default network driver.

- host: removes network isolation between the container and the Docker host.

- none: completely isolates a container from the host and other containers.

- overlay: connects multiple Docker daemons together.

- ipvlan: provides full control over both IPv4 and IPv6 addressing.

- macvlan: assigns a MAC address to a container.

Docker Volumes

Volumes are stored in a part of the host filesystem which is managed by Docker (/var/lib/docker/volumes/ on Linux). Non-Docker processes should not modify this part of the filesystem. Volumes are the best way to persist data in Docker.

Bind mounts may be stored anywhere on the host system. They may even be important system files or directories. Non-Docker processes on the Docker host or a Docker container can modify them at any time.

tmpfs mounts are stored in the host system's memory only, and are never written to the host system's filesystem.